Google’s most recent update is a huge leap forward in machine learning, but it's interesting for another reason: we can use it to predict what Google will do next. With that knowledge, you can stay ahead of the digital marketing trends of 2020 and gain an edge on your competitors. Find out more about BERT, what it says about the future of search, and what we can expect from ERNIE.

What is BERT?

BERT is the latest in a long line of Google algorithm updates. It describes a method used to train and prepare machine learning technology to better understand the language used by a Google Search user. The update looks at how words in a search query relate to each other, rather than just looking at the individual words.

By looking at how words are positioned relative to one another, with special attention to linguistic elements like conjunctions and prepositions, BERT builds a clearer idea of context. This represents a big advancement in a field called Natural Language Processing (NLP) - an area that’s concerned with how humans and machines (such as computers) interact using natural human language.

And if you’re wondering what BERT stands for, it’s Bidirectional Encoder Representations from Transformers. Don’t worry if this looks like word soup to you. You aren’t alone.
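If you'd like to unpick the "bidirectional" part, the toy Python sketch below may help. It is not BERT and trains nothing: it simply compares what a strictly left-to-right reader has seen at a given word with what is available when both sides are considered. The function names `left_context` and `both_contexts` are made up for this illustration.

```python
def left_context(tokens, i):
    """Words a strictly left-to-right reader has seen before position i."""
    return tokens[:i]


def both_contexts(tokens, i):
    """Words on either side of position i (the 'bidirectional' view)."""
    return tokens[:i], tokens[i + 1:]


tokens = "parking on a hill with no curb".split()
position = tokens.index("hill")

# Left-to-right only: the reader has not yet reached "no curb".
print(left_context(tokens, position))

# Both directions: "with no curb" is available when interpreting "hill".
print(both_contexts(tokens, position))
```

The point is only that the words after "hill" change what the whole query means, and a model that reads in both directions gets to use them.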

What’s the big deal?

The most important thing to know is that BERT is helping Google understand the meaning of search queries and improve the relevance of information served in the Search Engine Results Pages (SERPs).

When it first rolled out in the USA in October 2019, the update was expected to affect one in ten search queries made in US English. BERT has since been rolled out for more than 70 languages and in other markets, including the UK.

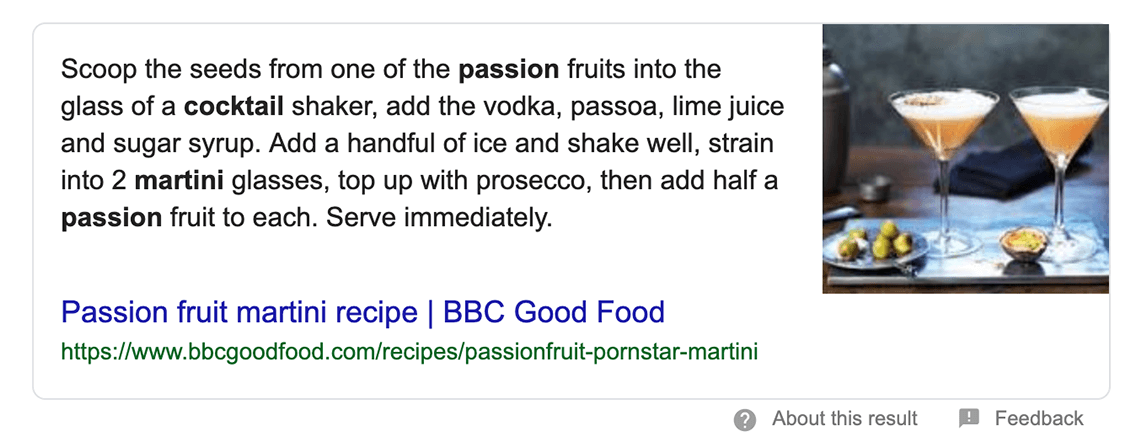

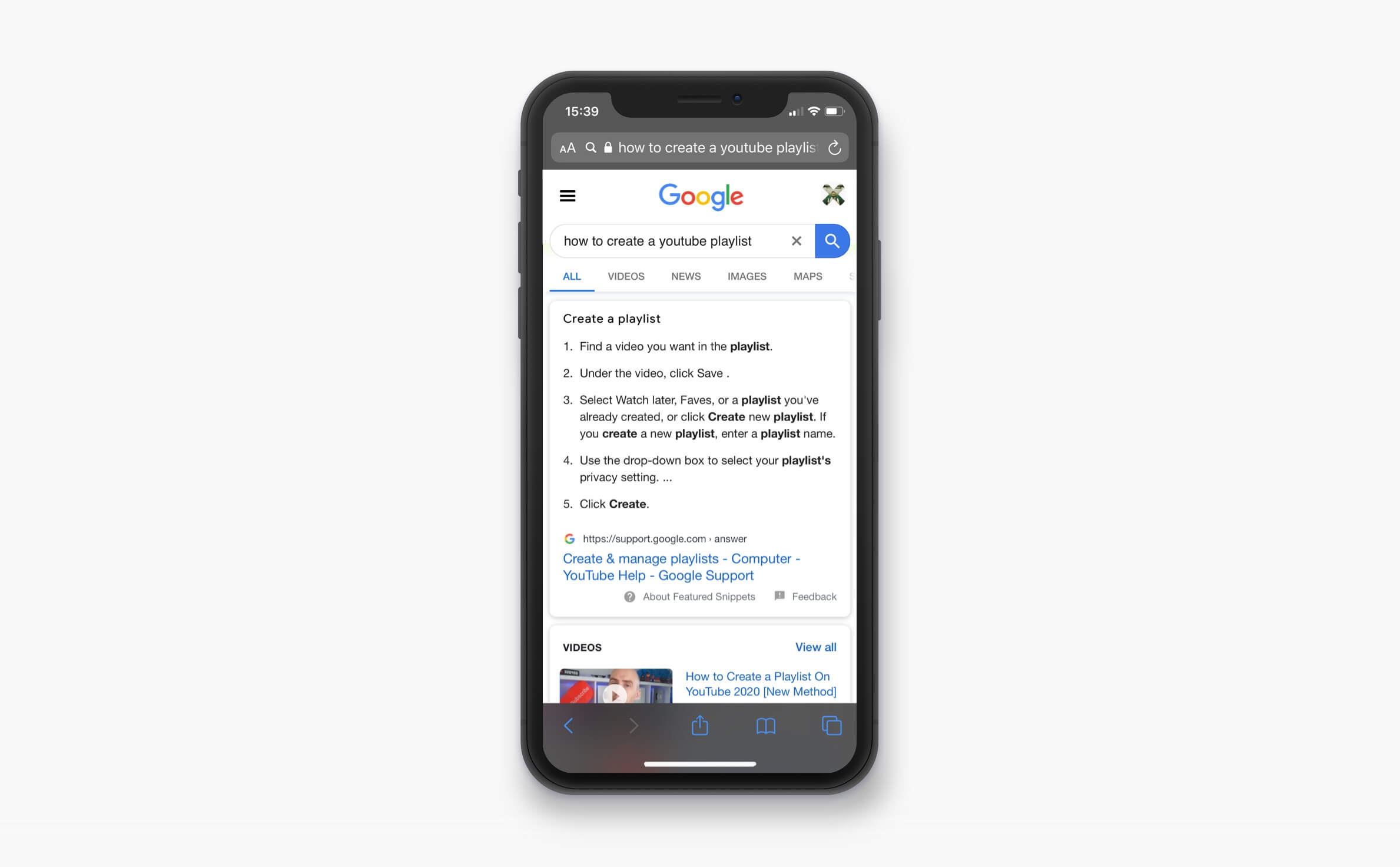

Affected most are “long-tail” search queries, consisting of longer-form questions and sentences. In turn, impacts are seen in the area of featured snippets (also known as ‘answer boxes’, ‘knowledge graphs’ or ‘direct answers’).

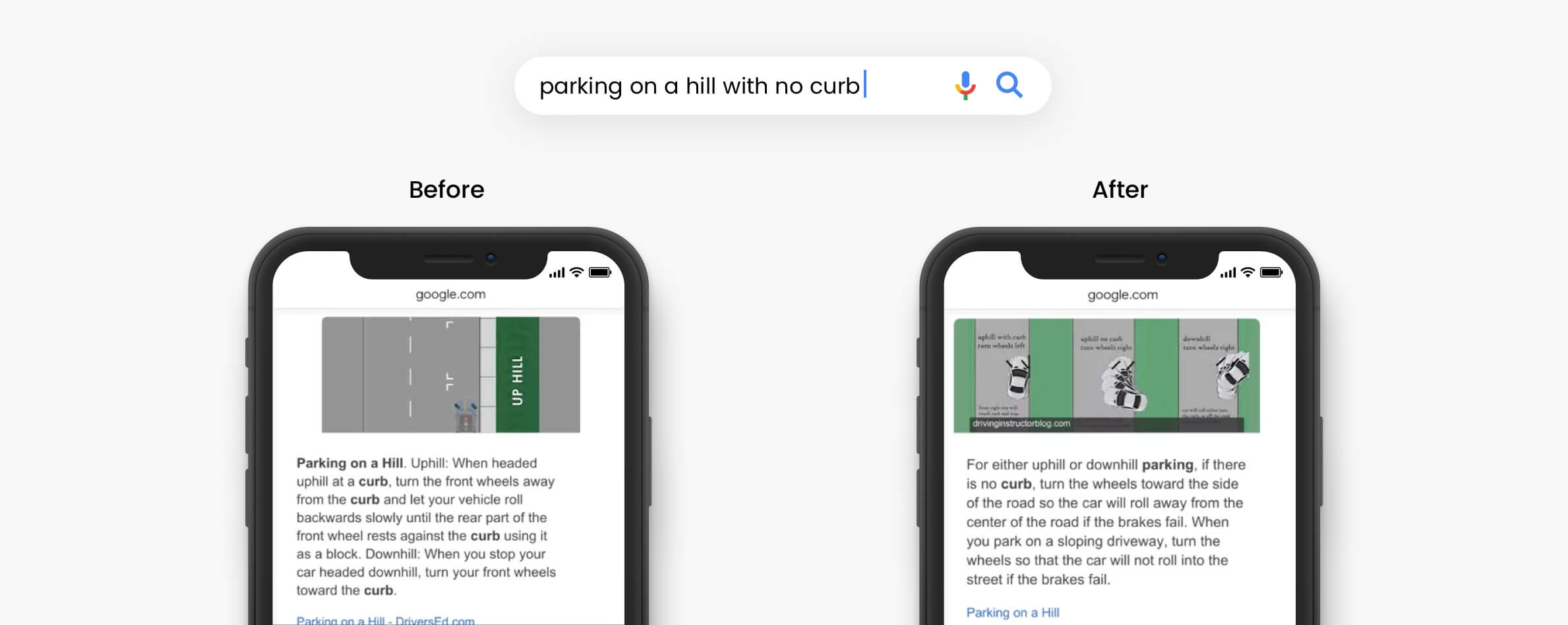

To illustrate how BERT works, Google has provided a few examples of how the update helps to serve the most relevant answers to its users.

In this instance, the featured snippet for the search query ‘parking on a hill with no curb’ transforms under BERT’s influence.

It’s clear that Google was previously placing a lot of emphasis on the word “curb”, ignoring the “no” despite it being a crucial aspect of the user’s query. BERT, however, has a much clearer understanding of what that “no” means in context. As a result, the answer served is much more appropriate.
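To see why dropping a small word like "no" matters, here is a deliberately naive Python sketch. It is an illustration only, not a description of how Google's systems worked before or after BERT: the `STOP_WORDS` list and the `keywords` helper are assumptions invented for this example.

```python
# Hypothetical stop-word list for this illustration; not Google's.
STOP_WORDS = {"on", "a", "with", "no", "the"}


def keywords(query):
    """Keep only the 'important' words, as a naive keyword matcher might."""
    return {word for word in query.lower().split() if word not in STOP_WORDS}


with_curb = keywords("parking on a hill with a curb")
no_curb = keywords("parking on a hill with no curb")

# Once the small words are thrown away, the two queries look identical.
print(with_curb == no_curb)  # True

# Keeping every word, in order, preserves the difference.
print("parking on a hill with a curb".split() == "parking on a hill with no curb".split())  # False
```

Two queries with opposite meanings collapse into the same set of keywords, which is exactly the kind of mistake that paying attention to every word in context avoids.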

How will BERT affect you?

Inevitably, there will be some flux. Websites that used to be the #1 result might see their position change and new featured snippets will take their place. Fortunately, this is a positive rebalancing, and there should be very few downsides for search users or website owners.

The good news is that, officially, there’s nothing you should need to change.

Do you engage in proper keyword research, with a data-led content strategy? Do you have good results and a determined focus on your users? Then there should be no rush to revolutionise your processes.

However, acknowledging that Google is going to be better at understanding user intent through language, there may be a few things to keep in mind:

Featured snippets aren’t going away

With mobile and voice search on the rise, featured snippets remain Google’s main method of serving quick answers to users. Optimise for these snippets if and where you can, as they are likely to be increasingly valuable for driving traffic and brand awareness!

The decline of ‘keyword-ese’

With BERT able to understand the intent in a text string, both Google users and businesses can be more confident about writing naturally online. Particularly for longer-form queries, it should no longer be necessary to manipulate keywords and syntax to generate the right results.

Focus on making users happy

Continue creating content that meets the expectations of your users and satisfies their intent - answering questions clearly and directly. If formatted correctly, Google should continue to reward you with high SERP rankings and featured snippets.

What comes next? The ERNIE to Google’s BERT

The search industry moves fast. So fast that some may already consider BERT a bit long in the tooth.

Sadly, the chances of seeing an update with an ‘ERNIE’ acronym are slim-to-none.

However, we can still use what we know about BERT to explore what the next significant rollout from Google might look like.

Natural progression: voice search

Speech recognition is another key aspect in the field of Natural Language Processing. It’s also fair to say that BERT is already targeted at understanding voiced queries made through mobile phones, voice and home assistants (queries which are normally of the long-tail and conversational variety).

Meanwhile, the digital marketing world has been waiting for years to see voice search become the world-changing technology that’s been predicted.

It’s possible that, as Google continues to train BERT and becomes more confident in its ability to determine meaning and intent, voice search will see a massive official update. For instance, we may, at last, have access to more tracking tools and hard data around voiced queries.

Alternatively, we may see featured snippets and other direct answers expanded into the SERPs for shorter-tail keywords where Google has a clear idea of the user’s intent (e.g. “bus times”).

A patent registered by Google also hints that voice assistants will, in future, collect data around voiced commands and linguistic habits to analyse the user’s needs, becoming a more “proactive” participant in daily life.

Related development: image processing and Google Lens

Another patent registered by Google hints at big developments in the area of image processing.

Notably, it describes a process where a user could ask their device: “what is in this image?” Google’s systems would then be able to directly identify individual sub-items, locations and entities within a given image.

Through CAPTCHA (or reCAPTCHA) gateways found all across the web, Google has already been hard at work training its AI in image recognition. If you’ve ever used Google Lens to perform a reverse image search online, you may know that Google is already pretty good at matching your photo data to visually similar items. So, it’s plausible that ERNIE might represent a similarly large leap in how images function in the online world.

Another Great Leap: Structured Data and Entities

You may have noticed the mention of entities, relating to image search.

If you don’t know, an “entity” to Google is essentially a thing - an animal, person, object, product, or even a company.

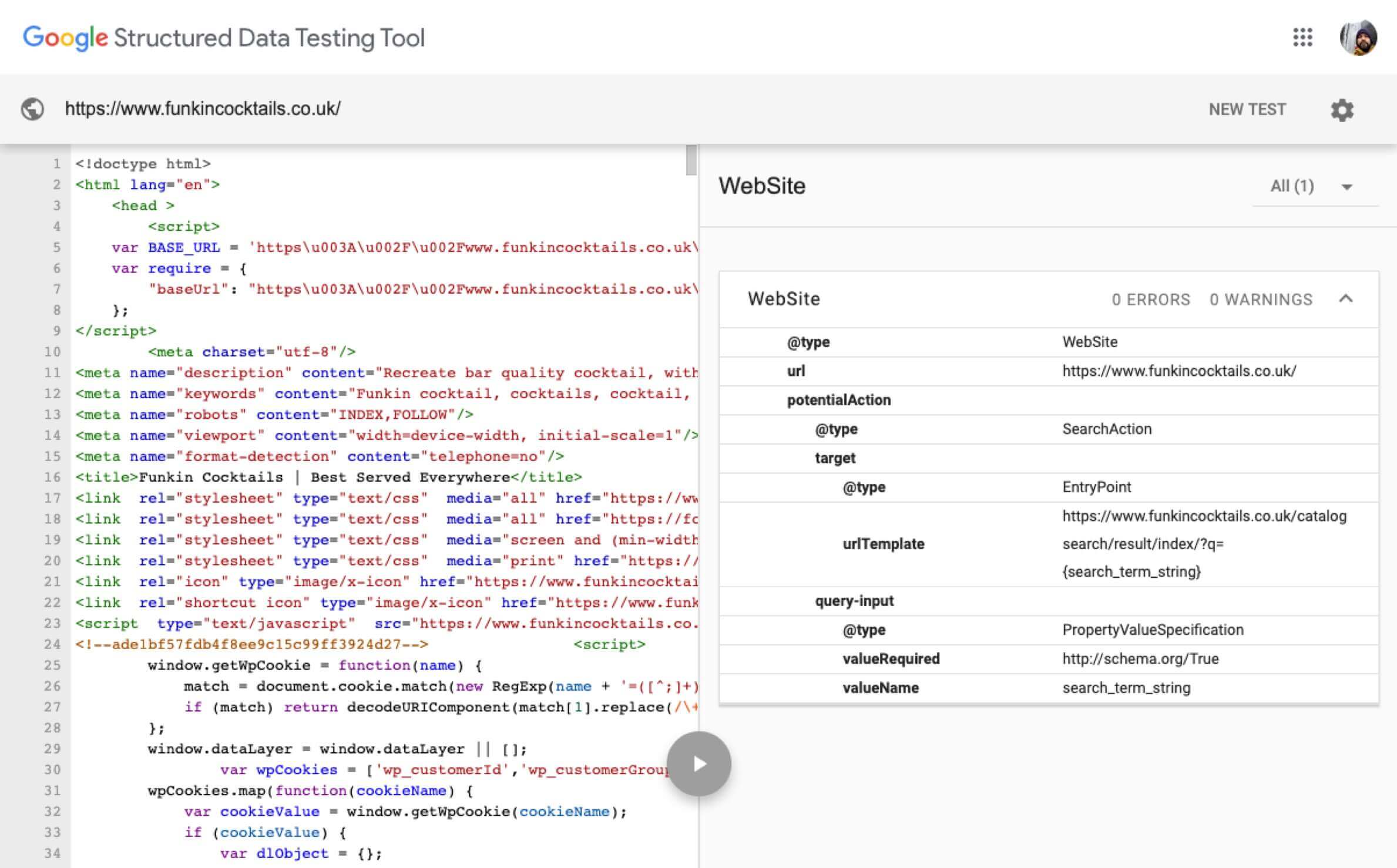

However, how exactly Google identifies entities - and public attitudes towards them - remains largely obscure. If we know anything, though, it’s that structured data (or schema mark-up) is a key tool in helping search engines to understand and distinguish between “things”.
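For a feel of what structured data looks like in practice, the Python sketch below builds a small schema.org `Organization` description and wraps it in the JSON-LD script tag that normally sits in a page's HTML. The company name, URLs and the `organization_jsonld` helper are placeholders invented for this example, and marking up a page this way is no guarantee of any particular result in Google.

```python
import json


def organization_jsonld(name, url, same_as):
    """Build a minimal schema.org Organization description as a dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        # Other profiles that refer to the same entity.
        "sameAs": list(same_as),
    }


# Placeholder values; swap in your own company details.
markup = organization_jsonld(
    name="Example Ltd",
    url="https://www.example.com",
    same_as=["https://twitter.com/example"],
)

snippet = (
    '<script type="application/ld+json">\n'
    + json.dumps(markup, indent=2)
    + "\n</script>"
)
print(snippet)
```

The `sameAs` links are the part most closely tied to entities: they tell a search engine that the website and the listed profiles all describe the same "thing".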

Recent updates from Google have allowed companies to claim SERP features related to their company entity, such as Knowledge Panels. Similar features are being tested in India. ERNIE could be an advancement in this area, giving the transparency and tools needed to manage the online presence of entities you’re connected to. Along these lines, the accuracy and validity of your structured data may one day become a factor in your Google rankings.

Staying ahead

We all know Google’s algorithm updates can be unpredictable. However, at Selesti, we expect you’ll see a combination of the above deployed (or at least developed) over the coming years - whether by increments or as significant updates similar in scope to BERT.

Want to talk about BERT, featured snippets, user intent, or the future of Search? Get in touch with one of our experts and we’ll help where we can.